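KVM Deployment, Usage, and Tuning

Background

Cloud computing is not the same as virtualization.

Virtualization is a technology.

Cloud computing is a model for delivering and consuming resources.

Application virtualization:

For example, xshell is not installed on your machine, yet you can click an xshell icon and launch the program. That is what application virtualization does.

XenApp is probably the best-known application virtualization product.

In Internet companies, server virtualization is the most widely used form.

For network I/O, paravirtualization also performs better.

KVM supports overcommitting (presenting more virtual CPUs than the host physically has).

Xen-based offerings are usually described as not overcommitted. When a VPS vendor tells you its service is based on Xen, the implied message is that resources are not oversold.

To set up the base environment for these exercises, see this link:

http://www.cnblogs.com/nmap/p/6368157.html

Getting started with KVM

First check whether the server's CPU supports KVM virtualization. If the command prints flags lines like the ones below, it does.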

    [root@data-1-1 ~]# grep -E 'vmx|svm' /proc/cpuinfo
    flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts mmx fxsr sse sse2 ss ht syscall nx rdtscp lm constant_tsc arch_perfmon pebs bts nopl xtopology tsc_reliable nonstop_tsc aperfmperf pni pclmulqdq vmx ssse3 cx16 pcid sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx hypervisor lahf_lm ida arat epb pln pts dtherm tpr_shadow vnmi ept vpid tsc_adjust
    flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts mmx fxsr sse sse2 ss ht syscall nx rdtscp lm constant_tsc arch_perfmon pebs bts nopl xtopology tsc_reliable nonstop_tsc aperfmperf pni pclmulqdq vmx ssse3 cx16 pcid sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx hypervisor lahf_lm ida arat epb pln pts dtherm tpr_shadow vnmi ept vpid tsc_adjust
    flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts mmx fxsr sse sse2 ss ht syscall nx rdtscp lm constant_tsc arch_perfmon pebs bts nopl xtopology tsc_reliable nonstop_tsc aperfmperf pni pclmulqdq vmx ssse3 cx16 pcid sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx hypervisor lahf_lm ida arat epb pln pts dtherm tpr_shadow vnmi ept vpid tsc_adjust
    flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts mmx fxsr sse sse2 ss ht syscall nx rdtscp lm constant_tsc arch_perfmon pebs bts nopl xtopology tsc_reliable nonstop_tsc aperfmperf pni pclmulqdq vmx ssse3 cx16 pcid sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx hypervisor lahf_lm ida arat epb pln pts dtherm tpr_shadow vnmi ept vpid tsc_adjust
    [root@data-1-1 ~]#
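The same check can be wrapped in a small function, for instance for use in a provisioning script. This is only a sketch: the function name `count_virt_cpus` and its optional file argument are made up for illustration and are not part of any KVM tooling.

```shell
#!/bin/sh
# count_virt_cpus [FILE]   (hypothetical helper)
# Print how many logical CPUs advertise the vmx (Intel VT-x) or svm (AMD-V) flag.
# FILE defaults to /proc/cpuinfo; the argument only exists so the function
# can be tried against a saved copy of another host's cpuinfo.
count_virt_cpus() {
    cpuinfo=${1:-/proc/cpuinfo}
    # Look only at the "flags" lines and match vmx/svm as whole words.
    # grep -c prints 0 but exits non-zero when nothing matches, so mask the status.
    grep -E '^flags' "$cpuinfo" | grep -cwE 'vmx|svm' || true
}
```

A result greater than 0 means at least one logical CPU exposes hardware virtualization; on the host shown above, which has four flags lines containing vmx, the count would be 4.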

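Installing the KVM packages

virt-install: the package that provides the virt-install tool, used to create virtual machines

qemu-kvm: the main KVM package

virt-manager: a GUI tool for managing virtual machines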

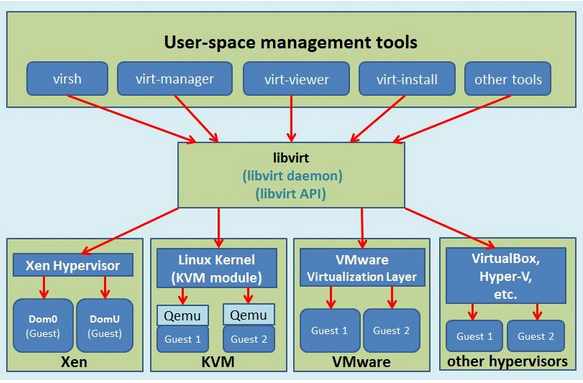

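libvirt: the interface layer that talks to the underlying KVM kernel module. All the user-space management commands go through it. If it is stopped, running KVM guests keep working, but they can no longer be managed.

virt-install: a command for creating virtual machines, built on the libvirt service

bridge-utils: tools for creating and managing bridge devices (installed automatically as a dependency of the packages above)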

    [root@data-1-1 ~]# yum -y install qemu-kvm qemu-kvm-tools virt-manager libvirt virt-install
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
     * base: mirrors.163.com
     * extras: mirrors.163.com
     * updates: mirrors.163.com
    Package 10:qemu-kvm-1.5.3-126.el7_3.3.x86_64 already installed and latest version
    Package 10:qemu-kvm-tools-1.5.3-126.el7_3.3.x86_64 already installed and latest version
    Package virt-manager-1.4.0-2.el7.noarch already installed and latest version
    Package libvirt-2.0.0-10.el7_3.4.x86_64 already installed and latest version
    Package virt-install-1.4.0-2.el7.noarch already installed and latest version
    Nothing to do
    [root@data-1-1 ~]#

After installation, both of the commands below show a new network device, virbr0.

    [root@data-1-1 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    virbr0          8000.5254002430ec       yes             virbr0-nic
    [root@data-1-1 ~]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.145.133  netmask 255.255.255.0  broadcast 192.168.145.255
            inet6 fe80::20c:29ff:fea7:1724  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:a7:17:24  txqueuelen 1000  (Ethernet)
            RX packets 165  bytes 27580 (26.9 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 148  bytes 23370 (22.8 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            inet6 ::1  prefixlen 128  scopeid 0x10<host>
            loop  txqueuelen 0  (Local Loopback)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    virbr0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
            inet 192.168.122.1  netmask 255.255.255.0  broadcast 192.168.122.255
            ether 52:54:00:24:30:ec  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    [root@data-1-1 ~]#

Start the libvirtd service, which everything else depends on.

Enable libvirtd at boot and start it right away:

    [root@data-1-1 ~]# systemctl enable libvirtd.service
    [root@data-1-1 ~]# systemctl start libvirtd.service
    [root@data-1-1 ~]# systemctl status libvirtd.service

The qemu-img tool

    [root@data-1-1 ~]# whereis qemu-img
    qemu-img: /usr/bin/qemu-img /usr/share/man/man1/qemu-img.1.gz
    [root@data-1-1 ~]# rpm -qf /usr/bin/qemu-img
    qemu-img-1.5.3-126.el7_3.3.x86_64
    [root@data-1-1 ~]#

Use qemu-img to create a disk image, specifying the format, the path, and the size:

    [root@data-1-1 ~]# qemu-img create -f raw /opt/CentOS-7.1-x86_64.raw 10G
    Formatting '/opt/CentOS-7.1-x86_64.raw', fmt=raw size=10737418240
    [root@data-1-1 ~]#

Prepare the installation media for the guest. Here the ISO is the same release as the host system.

    [root@data-1-1 ~]# mkdir /tools
    [root@data-1-1 ~]# dd if=/dev/sr0 of=/tools/CentOS-7-x86_64-DVD-1503-01.iso
    8419328+0 records in
    8419328+0 records out
    4310695936 bytes (4.3 GB) copied, 112.997 s, 38.1 MB/s
    [root@data-1-1 ~]#

On CentOS 6, virt-install came along with virt-manager; on CentOS 7 the virt-install package has to be installed separately.

    virt-install --virt-type kvm --name CentOS-7-x86_64 --ram 2048 \
      --cdrom=/tools/CentOS-7-x86_64-DVD-1503-01.iso --disk path=/opt/CentOS-7.1-x86_64.raw \
      --network network=default --graphics vnc,listen=0.0.0.0 --noautoconsole

The run looks like this:

    [root@data-1-1 ~]# virt-install --virt-type kvm --name CentOS-7-x86_64 --ram 2048 --cdrom=/tools/CentOS-7-x86_64-DVD-1503-01.iso --disk path=/opt/CentOS-7.1-x86_64.raw \
    > --network network=default --graphics vnc,listen=0.0.0.0 --noautoconsole

    Starting install...
    Creating domain...                                    |    0 B  00:00:00
    Domain installation still in progress. You can reconnect to
    the console to complete the installation process.
    [root@data-1-1 ~]#

Have a VNC client ready beforehand and connect to the guest.

Select Install CentOS 7, press Tab, and append net.ifnames=0 biosdevname=0 to the boot line.

The remaining steps are essentially the same as installing the host. There is no need to create a swap partition: the guest is already a virtual machine, and swapping inside it performs poorly. Alibaba Cloud instances, for example, come without swap.

Pay attention to the last step: clicking Reboot actually powers the guest off. It has to be started manually with virsh.

virsh list shows the virtual machines:

    [root@data-1-1 ~]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     3     CentOS-7-x86_64                running

    [root@data-1-1 ~]# virsh list
     Id    Name                           State
    ----------------------------------------------------

    [root@data-1-1 ~]# virsh list --all
     Id    Name                           State
    ----------------------------------------------------
     -     CentOS-7-x86_64                shut off

    [root@data-1-1 ~]# virsh start CentOS-7-x86_64
    Domain CentOS-7-x86_64 started

    [root@data-1-1 ~]# virsh list --all
     Id    Name                           State
    ----------------------------------------------------
     4     CentOS-7-x86_64                running

    [root@data-1-1 ~]#

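Common virsh commands

List the running virtual machines

virsh list

List every virtual machine on the host, whatever its state (shut off, paused, and so on)

virsh list --all

Shut down a virtual machine

virsh shutdown CentOS-7-x86_64 (the domain name)

virsh destroy CentOS-7-x86_64 (the domain name), which is a forced power-off, similar to kill -9 on the process

Start a virtual machine

virsh start CentOS-7-x86_64

Delete a virtual machine

virsh undefine CentOS-7-x86_64

Edit a virtual machine

virsh edit CentOS-7-x86_64

Suspend a virtual machine

virsh suspend CentOS-7-x86_64

Resume a virtual machine

virsh resume CentOS-7-x86_64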

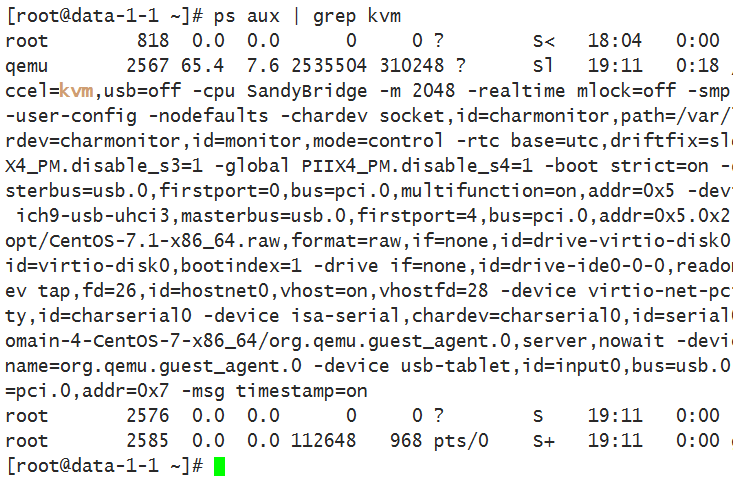

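To see the running virtual machines at the process level:

ps aux | grep kvm

Each KVM guest runs as an ordinary process, so it can also be killed with kill -9.

If libvirtd is stopped, the guests keep running, but you can no longer manage them.

libvirt has no effect on the guests themselves; it is only the management layer.

    [root@data-1-1 ~]# systemctl stop libvirtd
    [root@data-1-1 ~]# virsh list --all
    error: failed to connect to the hypervisor
    error: Failed to connect socket to '/var/run/libvirt/libvirt-sock': No such file or directory
    [root@data-1-1 ~]#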

Log in to the guest with vncviewer

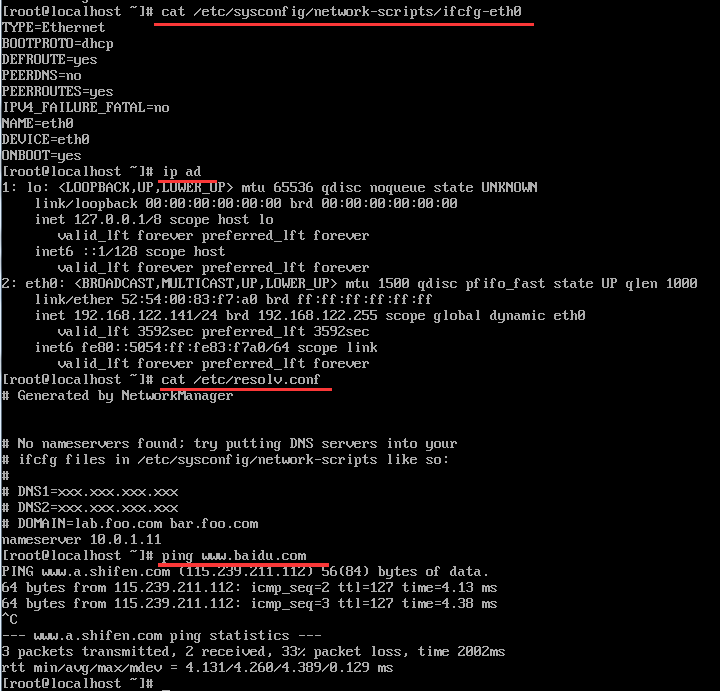

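Edit the guest's IP configuration: remove the IPv6 settings, set ONBOOT to yes, restart the network service, update resolv.conf, and set PEERDNS to no. The guest can then ping external hosts.

Install some tools in the new guest. For example, if the ifconfig command is missing, install the net-tools package; the ip command is available even without it.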

yum install vim screen mtr nc nmap lrzsz openssl-devel gcc glibc gcc-c++ make zip dos2unix mysql sysstat wget rsync net-tools dstat setuptool system-config-* iptables ntsysv -y

On the host, once the guest has been created, an XML file appears under the path below. It is the guest's configuration file.

    [root@data-1-1 ~]# cd /etc/libvirt/qemu
    [root@data-1-1 qemu]# ll
    total 4
    -rw------- 1 root root 3844 Feb  6 18:48 CentOS-7-x86_64.xml
    drwx------. 3 root root   40 Feb  6 18:04 networks
    [root@data-1-1 qemu]# less CentOS-7-x86_64.xml
    [root@data-1-1 qemu]#

It defines the guest's software and hardware. The memory, currentMemory, and vcpu elements near the top define memory and CPU.

    [root@data-1-1 qemu]# cat CentOS-7-x86_64.xml
    <!--
    WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
    OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:
      virsh edit CentOS-7-x86_64
    or other application using the libvirt API.
    -->

    <domain type='kvm'>
      <name>CentOS-7-x86_64</name>
      <uuid>702d4eed-7463-4ded-b8f8-a70a4f7164ce</uuid>
      <memory unit='KiB'>2097152</memory>
      <currentMemory unit='KiB'>2097152</currentMemory>
      <vcpu placement='static'>1</vcpu>
      <os>
        <type arch='x86_64' machine='pc-i440fx-rhel7.0.0'>hvm</type>
        <boot dev='hd'/>
      </os>
      <features>
        <acpi/>
        <apic/>
      </features>
      <cpu mode='custom' match='exact'>
        <model fallback='allow'>SandyBridge</model>
      </cpu>
      <clock offset='utc'>
        <timer name='rtc' tickpolicy='catchup'/>
        <timer name='pit' tickpolicy='delay'/>
        <timer name='hpet' present='no'/>
      </clock>
      <on_poweroff>destroy</on_poweroff>
      <on_reboot>restart</on_reboot>
      <on_crash>restart</on_crash>
      <pm>
        <suspend-to-mem enabled='no'/>
        <suspend-to-disk enabled='no'/>
      </pm>
      <devices>
        <emulator>/usr/libexec/qemu-kvm</emulator>
        <disk type='file' device='disk'>
          <driver name='qemu' type='raw'/>
          <source file='/opt/CentOS-7.1-x86_64.raw'/>
          <target dev='vda' bus='virtio'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
        </disk>
        <disk type='file' device='cdrom'>
          <driver name='qemu' type='raw'/>
          <target dev='hda' bus='ide'/>
          <readonly/>
          <address type='drive' controller='0' bus='0' target='0' unit='0'/>
        </disk>
        <controller type='usb' index='0' model='ich9-ehci1'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x7'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci1'>
          <master startport='0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0' multifunction='on'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci2'>
          <master startport='2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x1'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci3'>
          <master startport='4'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x2'/>
        </controller>
        <controller type='pci' index='0' model='pci-root'/>
        <controller type='ide' index='0'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
        </controller>
        <controller type='virtio-serial' index='0'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
        </controller>
        <interface type='network'>
          <mac address='52:54:00:83:f7:a0'/>
          <source network='default'/>
          <model type='virtio'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
        </interface>
        <serial type='pty'>
          <target port='0'/>
        </serial>
        <console type='pty'>
          <target type='serial' port='0'/>
        </console>
        <channel type='unix'>
          <target type='virtio' name='org.qemu.guest_agent.0'/>
          <address type='virtio-serial' controller='0' bus='0' port='1'/>
        </channel>
        <input type='tablet' bus='usb'>
          <address type='usb' bus='0' port='1'/>
        </input>
        <input type='mouse' bus='ps2'/>
        <input type='keyboard' bus='ps2'/>
        <graphics type='vnc' port='-1' autoport='yes' listen='0.0.0.0'>
          <listen type='address' address='0.0.0.0'/>
        </graphics>
        <video>
          <model type='cirrus' vram='16384' heads='1' primary='yes'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
        </video>
        <memballoon model='virtio'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
        </memballoon>
      </devices>
    </domain>
    [root@data-1-1 qemu]#

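A few important parts are worth pointing out. The VNC port is -1 with autoport='yes', which means the port is assigned automatically, starting at 5900 for the first guest.

    <graphics type='vnc' port='-1' autoport='yes' listen='0.0.0.0'>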
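Because the port is assigned automatically, it helps to be able to work out which TCP port a given guest ended up on. VNC display N corresponds to TCP port 5900 + N. The helper below is a sketch; the name `vnc_display_to_port` is made up, and it assumes the display is given in the `:N` or `host:N` form that `virsh vncdisplay` prints.

```shell
#!/bin/sh
# vnc_display_to_port DISPLAY   (hypothetical helper)
# Convert a VNC display such as ":0" or "127.0.0.1:1" to the TCP port
# a VNC client has to connect to (5900 + display number).
vnc_display_to_port() {
    display=${1##*:}            # keep only the number after the last colon
    echo $((5900 + display))
}
```

On a host with libvirt running, it could be combined with virsh as `vnc_display_to_port "$(virsh vncdisplay CentOS-7-x86_64)"`.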

These are the maximum memory, the current memory, and the number of vCPUs:

    <memory unit='KiB'>2097152</memory>
    <currentMemory unit='KiB'>2097152</currentMemory>
    <vcpu placement='static'>1</vcpu>

Below are the disk format and path:

    <driver name='qemu' type='raw'/>
    <source file='/opt/CentOS-7.1-x86_64.raw'/>

hvm means hardware-assisted virtualization:

    <type arch='x86_64' machine='pc-i440fx-rhel7.0.0'>hvm</type>

The header of the file warns that, to change the guest configuration, you should use the command below rather than edit the file directly:

    WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
    OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:
      virsh edit CentOS-7-x86_64

Some important libvirt commands

virsh --help

The dumpxml subcommand exports a guest's XML definition. If the guest definition is deleted some day, the guest can be brought back from that XML file.

If you physically delete the guest (that is, its disk image), it cannot be recovered. The XML file is comparable to a static SaltStack state description.

    [root@data-1-1 qemu]# systemctl start libvirtd
    [root@data-1-1 qemu]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     4     CentOS-7-x86_64                running

    [root@data-1-1 qemu]# ll
    total 4
    -rw------- 1 root root 3844 Feb  6 18:48 CentOS-7-x86_64.xml
    drwx------. 3 root root   40 Feb  6 18:04 networks
    [root@data-1-1 qemu]# virsh dumpxml CentOS-7-x86_64 >kvm1.xml
    [root@data-1-1 qemu]# ll
    total 12
    -rw------- 1 root root 3844 Feb  6 18:48 CentOS-7-x86_64.xml
    -rw-r--r-- 1 root root 4740 Feb  6 22:54 kvm1.xml
    drwx------. 3 root root   40 Feb  6 18:04 networks
    [root@data-1-1 qemu]#

Deleting a virtual machine

Use the undefine subcommand to delete a guest. It removes the definition for good; without a backup of the XML configuration, the guest cannot be restored.

    [root@data-1-1 qemu]# virsh undefine CentOS-7-x86_64
    Domain CentOS-7-x86_64 has been undefined

    [root@data-1-1 qemu]# ll
    total 8
    -rw-r--r-- 1 root root 4740 Feb  6 22:54 kvm1.xml
    drwx------. 3 root root   40 Feb  6 18:04 networks
    [root@data-1-1 qemu]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     4     CentOS-7-x86_64                running

    [root@data-1-1 qemu]#

The guest is still running at this point, but as soon as it is shut down it will disappear.

We can still back up its configuration from the running guest, though.

    [root@data-1-1 qemu]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     4     CentOS-7-x86_64                running

    [root@data-1-1 qemu]# virsh dumpxml CentOS-7-x86_64 >kvm2.xml
    [root@data-1-1 qemu]# ll
    total 16
    -rw-r--r-- 1 root root 4740 Feb  6 22:54 kvm1.xml
    -rw-r--r-- 1 root root 4740 Feb  6 22:57 kvm2.xml
    drwx------. 3 root root   40 Feb  6 18:04 networks
    [root@data-1-1 qemu]#

Shut down the KVM guest. It no longer shows up, even with --all.

    [root@data-1-1 qemu]# virsh shutdown CentOS-7-x86_64
    Domain CentOS-7-x86_64 is being shutdown

    [root@data-1-1 qemu]# virsh list --all
     Id    Name                           State
    ----------------------------------------------------

    [root@data-1-1 qemu]#

Restoring the guest from the backed-up configuration file

    [root@data-1-1 qemu]# virsh define kvm1.xml
    Domain CentOS-7-x86_64 defined from kvm1.xml

    [root@data-1-1 qemu]# virsh list --all
     Id    Name                           State
    ----------------------------------------------------
     -     CentOS-7-x86_64                shut off

    [root@data-1-1 qemu]# virsh start CentOS-7-x86_64
    Domain CentOS-7-x86_64 started

    [root@data-1-1 qemu]# virsh list --all
     Id    Name                           State
    ----------------------------------------------------
     5     CentOS-7-x86_64                running

    [root@data-1-1 qemu]#
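Since a guest can only be redefined if its XML was saved first, it may be worth dumping every definition on a schedule. The function below is a sketch of that idea; the name `backup_domain_xml` and the target directory are assumptions, while `virsh list --all --name` and `virsh dumpxml` are the same virsh subcommands used in this article.

```shell
#!/bin/sh
# backup_domain_xml DIR   (hypothetical helper)
# Dump the XML definition of every defined domain, running or not, into DIR,
# one file per domain, so each can later be restored with `virsh define`.
backup_domain_xml() {
    dir=$1
    mkdir -p "$dir" || return 1
    # `virsh list --all --name` prints one domain name per line.
    virsh list --all --name | while IFS= read -r dom; do
        [ -n "$dom" ] || continue          # skip the trailing blank line
        virsh dumpxml "$dom" > "$dir/$dom.xml"
    done
}
```

For example, `backup_domain_xml /tools/xml-backup` would leave a CentOS-7-x86_64.xml file there that `virsh define` accepts; the directory name is only an example.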

After the restore, the configuration file is back as well.

    [root@data-1-1 qemu]# ll
    total 20
    -rw------- 1 root root 4001 Feb  6 23:00 CentOS-7-x86_64.xml
    -rw-r--r-- 1 root root 4740 Feb  6 22:54 kvm1.xml
    -rw-r--r-- 1 root root 4740 Feb  6 22:57 kvm2.xml
    drwx------. 3 root root   40 Feb  6 18:04 networks
    [root@data-1-1 qemu]#

Snapshots

Snapshot metadata is stored under /var/lib/libvirt/qemu/snapshot/<guest name>/. No snapshot has been taken yet, so the directory is empty.

The snapshot-related subcommands are listed below.

    [root@data-1-1 qemu]# cd /var/lib/libvirt/qemu/snapshot/
    [root@data-1-1 snapshot]# ll
    total 0
    [root@data-1-1 snapshot]# virsh shutdown CentOS-7-x86_64
    Domain CentOS-7-x86_64 is being shutdown

    [root@data-1-1 snapshot]# virsh list --all
     Id    Name                           State
    ----------------------------------------------------
     -     CentOS-7-x86_64                shut off

    [root@data-1-1 snapshot]# virsh --help | grep snapshot
        iface-begin                    create a snapshot of current interfaces settings, which can be later committed (iface-commit) or restored (iface-rollback)
     Snapshot (help keyword 'snapshot')
        snapshot-create                Create a snapshot from XML
        snapshot-create-as             Create a snapshot from a set of args
        snapshot-current               Get or set the current snapshot
        snapshot-delete                Delete a domain snapshot
        snapshot-dumpxml               Dump XML for a domain snapshot
        snapshot-edit                  edit XML for a snapshot
        snapshot-info                  snapshot information
        snapshot-list                  List snapshots for a domain
        snapshot-parent                Get the name of the parent of a snapshot
        snapshot-revert                Revert a domain to a snapshot
    [root@data-1-1 snapshot]#

Guests whose disk is in raw format do not support (internal) snapshots.

    [root@data-1-1 snapshot]# pwd
    /var/lib/libvirt/qemu/snapshot
    [root@data-1-1 snapshot]# ls
    [root@data-1-1 snapshot]# virsh snapshot-create CentOS-7-x86_64
    error: unsupported configuration: internal snapshot for disk vda unsupported for storage type raw

    [root@data-1-1 snapshot]# cd /opt/
    [root@data-1-1 opt]# ll
    total 2206412
    -rw-r--r-- 1 root root 10737418240 Feb  6 23:07 CentOS-7.1-x86_64.raw
    [root@data-1-1 opt]# qemu-img info CentOS-7.1-x86_64.raw
    image: CentOS-7.1-x86_64.raw
    file format: raw
    virtual size: 10G (10737418240 bytes)
    disk size: 2.1G
    [root@data-1-1 opt]#

Fortunately, the image format can be converted.

The guest must be shut down before the conversion.

-f: format of the source image

-O: format of the target image

Convert the image to qcow2. Note that the original file is kept.

    [root@data-1-1 opt]# qemu-img convert -f raw -O qcow2 CentOS-7.1-x86_64.raw CentOS-7.1-x86_64.qcow2
    [root@data-1-1 opt]# ll
    total 4413264
    -rw-r--r-- 1 root root  2259877888 Feb  6 23:15 CentOS-7.1-x86_64.qcow2
    -rw-r--r-- 1 root root 10737418240 Feb  6 23:07 CentOS-7.1-x86_64.raw
    [root@data-1-1 opt]# qemu-img info CentOS-7.1-x86_64.qcow2
    image: CentOS-7.1-x86_64.qcow2
    file format: qcow2
    virtual size: 10G (10737418240 bytes)
    disk size: 2.1G
    cluster_size: 65536
    Format specific information:
        compat: 1.1
        lazy refcounts: false
    [root@data-1-1 opt]#

In the disk section of the configuration, point the disk at the new qcow2 file and change the format to qcow2 as well.

virsh edit CentOS-7-x86_64

    <disk type='file' device='disk'>
      <driver name='qemu' type='raw'/>
      <source file='/opt/CentOS-7.1-x86_64.raw'/>
      <target dev='vda' bus='virtio'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </disk>

Change it as follows; both the driver type and the source file are updated.

    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2'/>
      <source file='/opt/CentOS-7.1-x86_64.qcow2'/>
      <target dev='vda' bus='virtio'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </disk>

Now take a snapshot. To be safe, you can boot the guest first to confirm it works, shut it down, and then create the snapshot.

A snapshot directory and file now appear under the path below.

    [root@data-1-1 opt]# virsh snapshot-create CentOS-7-x86_64
    Domain snapshot 1486394873 created
    [root@data-1-1 opt]# cd -
    /var/lib/libvirt/qemu/snapshot
    [root@data-1-1 snapshot]# pwd
    /var/lib/libvirt/qemu/snapshot
    [root@data-1-1 snapshot]# ls
    CentOS-7-x86_64
    [root@data-1-1 snapshot]# cd CentOS-7-x86_64/
    [root@data-1-1 CentOS-7-x86_64]# ls
    1486394873.xml
    [root@data-1-1 CentOS-7-x86_64]#

List the snapshots, then create another one.

    [root@data-1-1 CentOS-7-x86_64]# virsh snapshot-list CentOS-7-x86_64
     Name                 Creation Time             State
    ------------------------------------------------------------
     1486394873           2017-02-06 23:27:53 +0800 shutoff

    [root@data-1-1 CentOS-7-x86_64]# virsh snapshot-create CentOS-7-x86_64
    Domain snapshot 1486394993 created
    [root@data-1-1 CentOS-7-x86_64]# virsh snapshot-list CentOS-7-x86_64
     Name                 Creation Time             State
    ------------------------------------------------------------
     1486394873           2017-02-06 23:27:53 +0800 shutoff
     1486394993           2017-02-06 23:29:53 +0800 shutoff

    [root@data-1-1 CentOS-7-x86_64]# ll
    total 16
    -rw------- 1 root root 4480 Feb  6 23:29 1486394873.xml
    -rw------- 1 root root 4531 Feb  6 23:29 1486394993.xml
    [root@data-1-1 CentOS-7-x86_64]#
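The auto-generated snapshot names in the listing look like Unix timestamps, and they do line up with the Creation Time column: 1486394873 is 15:27:53 UTC, which is 23:27:53 at +0800. A small sketch for decoding such a name follows; the function name is made up, and it relies on the `-d @SECONDS` form of GNU date.

```shell
#!/bin/sh
# snapshot_name_to_utc NAME   (hypothetical helper)
# Treat an auto-generated snapshot name as seconds since the Unix epoch
# and print it as a UTC timestamp. Needs a date(1) that accepts -d @SECONDS.
snapshot_name_to_utc() {
    date -u -d "@$1" '+%Y-%m-%d %H:%M:%S'
}
```

This only applies to names libvirt generated itself; a snapshot created with an explicit name through snapshot-create-as carries whatever name it was given.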

Show the current snapshot. It is 1486394993, and its parent is 1486394873.

    [root@data-1-1 CentOS-7-x86_64]# virsh snapshot-current CentOS-7-x86_64
    <domainsnapshot>
      <name>1486394993</name>
      <state>shutoff</state>
      <parent>
        <name>1486394873</name>
      </parent>

Reverting to a particular snapshot

    [root@data-1-1 CentOS-7-x86_64]# virsh snapshot-list CentOS-7-x86_64
     Name                 Creation Time             State
    ------------------------------------------------------------
     1486394873           2017-02-06 23:27:53 +0800 shutoff
     1486394993           2017-02-06 23:29:53 +0800 shutoff

    [root@data-1-1 CentOS-7-x86_64]# virsh snapshot-revert CentOS-7-x86_64 1486394873

    [root@data-1-1 CentOS-7-x86_64]# virsh snapshot-current CentOS-7-x86_64
    <domainsnapshot>
      <name>1486394873</name>
      <state>shutoff</state>

Snapshots can also be inspected with qemu-img:

    [root@data-1-1 CentOS-7-x86_64]# cd /opt/
    [root@data-1-1 opt]# ll
    total 4413396
    -rw-r--r-- 1 root root  2260075008 Feb  6 23:32 CentOS-7.1-x86_64.qcow2
    -rw-r--r-- 1 root root 10737418240 Feb  6 23:07 CentOS-7.1-x86_64.raw
    [root@data-1-1 opt]# qemu-img info CentOS-7.1-x86_64.qcow2
    image: CentOS-7.1-x86_64.qcow2
    file format: qcow2
    virtual size: 10G (10737418240 bytes)
    disk size: 2.1G
    cluster_size: 65536
    Snapshot list:
    ID        TAG                 VM SIZE                DATE       VM CLOCK
    1         1486394873                0 2017-02-06 23:27:53   00:00:00.000
    2         1486394993                0 2017-02-06 23:29:53   00:00:00.000
    Format specific information:
        compat: 1.1
        lazy refcounts: false
    [root@data-1-1 opt]#

Deleting a snapshot

    [root@data-1-1 opt]# virsh snapshot-delete CentOS-7-x86_64 1486394873
    Domain snapshot 1486394873 deleted

    [root@data-1-1 opt]# virsh snapshot-list CentOS-7-x86_64
     Name                 Creation Time             State
    ------------------------------------------------------------
     1486394993           2017-02-06 23:29:53 +0800 shutoff

    [root@data-1-1 opt]#

Hot-adding CPU and memory

    [root@data-1-1 opt]# virt-install --help | grep cpu
      --vcpus VCPUS         Number of vcpus to configure for your guest. Ex:
                            --vcpus 5
                            --vcpus 5,maxcpus=10,cpuset=1-4,6,8
                            --vcpus sockets=2,cores=4,threads=2,
      --cpu CPU             CPU model and features. Ex:
                            --cpu coreduo,+x2apic
                            --cpu host
    [root@data-1-1 opt]# virt-install --help | grep memory
    usage: virt-install --name NAME --memory MB STORAGE INSTALL [options]
      --memory MEMORY       Configure guest memory allocation. Ex:
                            --memory 1024 (in MiB)
                            --memory 512,maxmemory=1024
      --memtune MEMTUNE     Tune memory policy for the domain process.
      --memorybacking MEMORYBACKING
                            Set memory backing policy for the domain process. Ex:
                            --memorybacking hugepages=on
    [root@data-1-1 opt]#

Here we do it by editing the configuration file.

First, the configuration has to allow CPU and memory to be changed at runtime.

The defaults are:

    <memory unit='KiB'>2097152</memory>
    <currentMemory unit='KiB'>2097152</currentMemory>
    <vcpu placement='static'>1</vcpu>

Change it as follows; the main change is the vcpu element, which now allows up to 4 vCPUs with 1 active at boot.

virsh edit CentOS-7-x86_64

    <memory unit='KiB'>2097152</memory>
    <currentMemory unit='KiB'>2097152</currentMemory>
    <vcpu placement='auto' current='1'>4</vcpu>

Restart the KVM guest.

Changing the CPU count at runtime

Look at the CPU-related subcommands; one of them is setvcpus.

    [root@data-1-1 opt]# virsh --help | grep cpu
        cpu-baseline                   compute baseline CPU
        cpu-compare                    compare host CPU with a CPU described by an XML file
        cpu-stats                      show domain cpu statistics
        setvcpus                       change number of virtual CPUs
        vcpucount                      domain vcpu counts
        vcpuinfo                       detailed domain vcpu information
        vcpupin                        control or query domain vcpu affinity
        guestvcpus                     query or modify state of vcpu in the guest (via agent)
        cpu-models                     CPU models
        maxvcpus                       connection vcpu maximum
        nodecpumap                     node cpu map
        nodecpustats                   Prints cpu stats of the node.
    [root@data-1-1 opt]#

The guest currently has 1 CPU.

    [root@data-1-1 opt]# ssh root@192.168.122.141
    root@192.168.122.141's password:
    Last login: Mon Feb  6 22:28:49 2017 from 192.168.122.1
    [root@localhost ~]# cat /proc/cpuinfo | grep processor | wc -l
    1
    [root@localhost ~]#

Change it to 2.

    [root@data-1-1 opt]# virsh setvcpus CentOS-7-x86_64 2 --live

    [root@data-1-1 opt]# ssh root@192.168.122.141
    root@192.168.122.141's password:
    Last login: Mon Feb  6 23:52:33 2017 from gateway
    [root@localhost ~]# cat /proc/cpuinfo | grep processor | wc -l
    2
    [root@localhost ~]#

Changing the CPU count at runtime is supported only on CentOS 7, not on CentOS 6.


Also, vCPUs can only be added at runtime, not removed. To reduce the count, shut the guest down and edit the configuration file.

Changes made at runtime are lost after a restart.

    [root@data-1-1 opt]# virsh setvcpus CentOS-7-x86_64 3 --live

    [root@data-1-1 opt]# virsh setvcpus CentOS-7-x86_64 2 --live
    error: unsupported configuration: failed to find appropriate hotpluggable vcpus to reach the desired target vcpu count

    [root@data-1-1 opt]#
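The two constraints seen so far (the count can only go up, and never above the maximum in the vcpu element) can be checked before calling virsh. The function below is an illustrative sketch; `check_vcpu_target` is a made-up name, and the rule that live removal fails reflects the setup in this article rather than every libvirt/QEMU version.

```shell
#!/bin/sh
# check_vcpu_target CURRENT TARGET MAX   (hypothetical helper)
# Return 0 if TARGET can be reached by a live hot-add: it must not be lower
# than CURRENT and must not exceed MAX (the value inside <vcpu>...</vcpu>).
# Otherwise print the reason to stderr and return 1.
check_vcpu_target() {
    current=$1 target=$2 max=$3
    if [ "$target" -lt "$current" ]; then
        echo "cannot hot-remove vCPUs: $current -> $target" >&2
        return 1
    fi
    if [ "$target" -gt "$max" ]; then
        echo "target $target exceeds maximum $max" >&2
        return 1
    fi
    return 0
}
```

A guarded call could then look like `check_vcpu_target 1 2 4 && virsh setvcpus CentOS-7-x86_64 2 --live`.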

In addition, on CentOS 7 the change takes effect in the guest immediately.

Older versions required bringing the newly added CPU online manually. Here cpu1 is already online:

    [root@data-1-1 ~]# virsh setvcpus CentOS-7-x86_64 2 --live

    [root@data-1-1 ~]# ssh root@192.168.122.141
    root@192.168.122.141's password:
    Last login: Tue Feb  7 00:09:34 2017 from gateway
    [root@localhost ~]# cat /sys/devices/system/cpu/cpu1/online
    1
    [root@localhost ~]#

The CPU count can also be checked from the host, without logging in to the guest:

    [root@data-1-1 ~]# virsh dominfo CentOS-7-x86_64 | grep CPU
    CPU(s):         1
    CPU time:       23.8s
    [root@data-1-1 ~]#

Changing memory at runtime

    [root@data-1-1 ~]# virsh qemu-monitor-command CentOS-7-x86_64 --hmp --cmd balloon 1512

    [root@data-1-1 ~]# ssh root@192.168.122.141
    root@192.168.122.141's password:
    Last login: Tue Feb  7 00:10:45 2017 from gateway
    [root@localhost ~]# free -m
                  total        used        free      shared  buff/cache   available
    Mem:           1464          95        1248           8         121        1238
    Swap:             0           0           0
    [root@localhost ~]# exit
    logout
    Connection to 192.168.122.141 closed.
    [root@data-1-1 ~]# virsh qemu-monitor-command CentOS-7-x86_64 --hmp --cmd balloon 600

    [root@data-1-1 ~]# ssh root@192.168.122.141
    root@192.168.122.141's password:
    Last login: Tue Feb  7 00:12:53 2017 from gateway
    [root@localhost ~]# free -m
                  total        used        free      shared  buff/cache   available
    Mem:            552          95         335           8         121         326
    Swap:             0           0           0
    [root@localhost ~]#

Guest memory can also be checked without logging in:

    [root@data-1-1 ~]# virsh qemu-monitor-command CentOS-7-x86_64 --hmp --cmd balloon 800

    [root@data-1-1 ~]# virsh dominfo CentOS-7-x86_64 | grep memory
    Max memory:     2097152 KiB
    Used memory:    819200 KiB
    [root@data-1-1 ~]# virsh qemu-monitor-command CentOS-7-x86_64 --hmp --cmd balloon 1800

    [root@data-1-1 ~]# virsh dominfo CentOS-7-x86_64 | grep memory
    Max memory:     2097152 KiB
    Used memory:    1843200 KiB
    [root@data-1-1 ~]#

Another way to change guest memory is virsh setmem. Note that memory cannot be set above the maximum.

    [root@data-1-1 ~]# virsh setmem CentOS-7-x86_64 748288

    [root@data-1-1 ~]# virsh dominfo CentOS-7-x86_64 | grep memory
    Max memory:     2097152 KiB
    Used memory:    748288 KiB
    [root@data-1-1 ~]# virsh setmem CentOS-7-x86_64 1748288

    [root@data-1-1 ~]# virsh dominfo CentOS-7-x86_64 | grep memory
    Max memory:     2097152 KiB
    Used memory:    1748288 KiB
    [root@data-1-1 ~]# virsh setmem CentOS-7-x86_64 2748288
    error: invalid argument: cannot set memory higher than max memory

    [root@data-1-1 ~]# virsh dominfo CentOS-7-x86_64 | grep memory
    Max memory:     2097152 KiB
    Used memory:    1748288 KiB
    [root@data-1-1 ~]#
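The balloon monitor command above takes MiB while virsh setmem takes KiB by default, which is easy to mix up. The two helpers below are a sketch for converting and for staying under the maximum; both names are hypothetical.

```shell
#!/bin/sh
# mib_to_kib MIB   (hypothetical helper)
# Convert mebibytes to the kibibytes that `virsh setmem` expects by default.
mib_to_kib() {
    echo $(($1 * 1024))
}

# clamp_to_max KIB MAX_KIB   (hypothetical helper)
# Print KIB unchanged, or MAX_KIB when KIB is larger, so the request
# never exceeds the "Max memory" value reported by `virsh dominfo`.
clamp_to_max() {
    if [ "$1" -gt "$2" ]; then echo "$2"; else echo "$1"; fi
}
```

For instance, `virsh setmem CentOS-7-x86_64 "$(clamp_to_max "$(mib_to_kib 1024)" 2097152)"` would request 1048576 KiB, and the 2748288 KiB request that failed above would have been capped at 2097152.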

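If you expect to hot-add resources later, plan for it when the guest is created by setting the maximum memory and CPU count up front.

As for growing the disk: resize can enlarge an image, but it carries a risk of data loss, so it is not recommended in production.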

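Comparison of disk formats

1. raw

The raw format is the simplest: it contains nothing extra, hence the name. It does not even have a header; it is just a file that the guest reads and writes directly. raw does not grow dynamically, so the size has to be fixed at creation, which can cost a lot of disk space. On filesystems that support sparse files (such as ext4), however, this is much less of a problem. Files created on ext4 are sparse by default, so no extra work is needed. Use

du -sh <filename>

to see the actual size of the file. In other words, regardless of how much disk space is available, the following command runs without a problem:

qemu-img create -f raw test.img 10000G

raw is the image format with the best I/O performance; other formats are benchmarked against it, and the closer they get to raw the better. But raw has no other features. With sparse files available, images such as qcow that allocate space at run time lose that particular advantage.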
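The gap between apparent size and allocated size can be seen without qemu-img at all. In the sketch below, `truncate` stands in for `qemu-img create -f raw` as an assumption (both produce a file with a set length and no data written), and `sparse_demo` is a made-up name.

```shell
#!/bin/sh
# sparse_demo FILE SIZE   (hypothetical helper)
# Create a sparse file of apparent size SIZE (for example 1G) and print two
# numbers: the apparent size in bytes and the space actually allocated in KiB.
sparse_demo() {
    file=$1 size=$2
    truncate -s "$size" "$file" || return 1
    apparent=$(wc -c < "$file")            # length recorded in the inode
    allocated=$(du -k "$file" | cut -f1)   # blocks really used on disk
    echo "$apparent $allocated"
}
```

On a filesystem with sparse-file support the second number stays far below the first, which is the same effect as the 10G raw image above reporting a disk size of only 2.1G.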

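2. cow

The cow format is as simple as raw and also allocates all its space at creation, but it keeps a bitmap recording which sectors are in use. That allows incremental images, so external snapshots are possible. Beyond that cow has no other features; simplicity is its defining trait.

3. qcow

qcow adds dynamic growth on top of cow and supports encryption and compression. It manages space allocation through a two-level index table; the second-level index is cached in memory and has to be looked up, which costs performance. qcow is rarely used today: its optimizations and features lag behind qcow2, and its read/write performance is worse than cow and raw.

4. qcow2

qcow2 is an all-in-one image format that supports internal snapshots, encryption, compression, and more, and its access performance keeps improving. Its drawback is that it is bloated, since every feature is packed into one format. Images stay small because the image file stores only the parts that changed, while the original file is kept locked.

qcow2 behaves like thin provisioning: although 10 GB is allocated, the space is not consumed up front; it is used only as needed.

Also, if you have hundreds of GB of data, keeping it inside a KVM guest is not recommended: I/O is slow and migrating the guest becomes cumbersome.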

KVM networking

When a KVM guest starts, a new network device, vnet0, appears on the host. It is created when the guest starts.

    [root@data-1-1 ~]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.145.133  netmask 255.255.255.0  broadcast 192.168.145.255
            inet6 fe80::20c:29ff:fea7:1724  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:a7:17:24  txqueuelen 1000  (Ethernet)
            RX packets 289558  bytes 327309816 (312.1 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 86615  bytes 17569530 (16.7 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            inet6 ::1  prefixlen 128  scopeid 0x10<host>
            loop  txqueuelen 0  (Local Loopback)
            RX packets 4  bytes 1844 (1.8 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 4  bytes 1844 (1.8 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    virbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.122.1  netmask 255.255.255.0  broadcast 192.168.122.255
            ether 52:54:00:24:30:ec  txqueuelen 0  (Ethernet)
            RX packets 45935  bytes 4046581 (3.8 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 84374  bytes 315862341 (301.2 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    vnet0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet6 fe80::fc54:ff:fe83:f7a0  prefixlen 64  scopeid 0x20<link>
            ether fe:54:00:83:f7:a0  txqueuelen 500  (Ethernet)
            RX packets 464  bytes 49210 (48.0 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 1356  bytes 104195 (101.7 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

After the guest starts, vnet0 is attached to the virbr0 bridge by default; it disappears when the guest shuts down.

    [root@data-1-1 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    virbr0          8000.5254002430ec       yes             virbr0-nic
                                                            vnet0
    [root@data-1-1 ~]# virsh shutdown CentOS-7-x86_64
    Domain CentOS-7-x86_64 is being shutdown

    [root@data-1-1 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    virbr0          8000.5254002430ec       yes             virbr0-nic
    [root@data-1-1 ~]#

At this point, outbound traffic from the guest goes through the virbr0 bridge, then through NAT in the host's iptables, and finally out via eth0.

This creates a network bottleneck and depends on iptables: if iptables is turned off, the guest loses Internet access. The guest's IP address is also a private address handed out from an address pool.

    [root@data-1-1 ~]# iptables -t nat -vnL
    Chain PREROUTING (policy ACCEPT 203 packets, 16415 bytes)
     pkts bytes target     prot opt in     out     source               destination

    Chain INPUT (policy ACCEPT 34 packets, 6320 bytes)
     pkts bytes target     prot opt in     out     source               destination

    Chain OUTPUT (policy ACCEPT 24 packets, 4644 bytes)
     pkts bytes target     prot opt in     out     source               destination

    Chain POSTROUTING (policy ACCEPT 24 packets, 4644 bytes)
     pkts bytes target     prot opt in     out     source               destination
        0     0 RETURN     all  --  *      *       192.168.122.0/24     224.0.0.0/24
        0     0 RETURN     all  --  *      *       192.168.122.0/24     255.255.255.255
        0     0 MASQUERADE  tcp  --  *      *       192.168.122.0/24    !192.168.122.0/24     masq ports: 1024-65535
      169 10095 MASQUERADE  udp  --  *      *       192.168.122.0/24    !192.168.122.0/24     masq ports: 1024-65535
        0     0 MASQUERADE  all  --  *      *       192.168.122.0/24    !192.168.122.0/24
    [root@data-1-1 ~]#

The guest's address comes from the dnsmasq instance that libvirt runs for the default network:

    [root@data-1-1 ~]# ps aux | grep dns
    nobody     1343  0.0  0.0  15544   964 ?        S    Feb06   0:00 /sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/libexec/libvirt_leaseshelper
    root       1344  0.0  0.0  15516   300 ?        S    Feb06   0:00 /sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/libexec/libvirt_leaseshelper
    root       7701  0.0  0.0 112648   968 pts/0    S+   00:36   0:00 grep --colour=auto dns
    [root@data-1-1 ~]# cat /var/lib/libvirt/dnsmasq/default.conf
    ##WARNING:  THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
    ##OVERWRITTEN AND LOST.  Changes to this configuration should be made using:
    ##    virsh net-edit default
    ## or other application using the libvirt API.
    ##
    ## dnsmasq conf file created by libvirt
    strict-order
    pid-file=/var/run/libvirt/network/default.pid
    except-interface=lo
    bind-dynamic
    interface=virbr0
    dhcp-range=192.168.122.2,192.168.122.254
    dhcp-no-override
    dhcp-lease-max=253
    dhcp-hostsfile=/var/lib/libvirt/dnsmasq/default.hostsfile
    addn-hosts=/var/lib/libvirt/dnsmasq/default.addnhosts
    [root@data-1-1 ~]#

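In production, the network setup is usually changed as follows:

Create a network bridge device.

Attach the host's NIC to that bridge.

Remove the IP address from the host's NIC.

Assign the host's address to the bridge instead.

Finally, edit the KVM guest's configuration so that the guest is also attached to that bridge.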

    [root@data-1-1 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    virbr0          8000.5254002430ec       yes             virbr0-nic
                                                            vnet0
    [root@data-1-1 ~]# brctl addbr br0
    [root@data-1-1 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    br0             8000.000000000000       no
    virbr0          8000.5254002430ec       yes             virbr0-nic
                                                            vnet0

The next steps drop the network connection while they run, so put them in a script and run the script in the background.

The steps are:

    [root@data-1-1 ~]# cd /tools
    [root@data-1-1 tools]# vim edit-net.sh
    [root@data-1-1 tools]# cat edit-net.sh
    brctl addif br0 eth0
    ip addr del dev eth0 192.168.145.133/24
    ifconfig br0 192.168.145.133/24 up
    route add default gw 192.168.145.2
    [root@data-1-1 tools]# sh edit-net.sh &
    [1] 8311
    [root@data-1-1 tools]#
    [1]+  Done                    sh edit-net.sh
    [root@data-1-1 tools]#
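The same switch can be expressed with iproute2 alone, without brctl, ifconfig, or route. The sketch below only prints the commands so they can be reviewed before anything touches the network; the function name bridge_switch_cmds and its four parameters are illustrative, and it assumes the bridge already exists, as it does after the brctl addbr step above.

```shell
# Print (do not run) the iproute2 commands that move a host address from a
# NIC onto an existing bridge.
# $1 = NIC, $2 = bridge, $3 = address in CIDR form, $4 = default gateway.
# bridge_switch_cmds is an illustrative name, not an existing tool.
bridge_switch_cmds() (
    nic="$1"; br="$2"; cidr="$3"; gw="$4"
    printf '%s\n' \
        "ip link set $nic master $br" \
        "ip addr del $cidr dev $nic" \
        "ip addr add $cidr dev $br" \
        "ip link set $br up" \
        "ip route replace default via $gw"
)
```

For the host in this article, bridge_switch_cmds eth0 br0 192.168.145.133/24 192.168.145.2 > edit-net.sh would produce a script that can be run with sh edit-net.sh & exactly as above. Generating the script first keeps the whole sequence in one file, which matters because the session is cut off partway through.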

eth0 no longer has an IP address, and it is now attached to br0:

    [root@data-1-1 tools]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    br0             8000.000c29a71724       no              eth0
    virbr0          8000.5254002430ec       yes             virbr0-nic
                                                            vnet0
    [root@data-1-1 tools]# ifconfig
    br0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.145.133  netmask 255.255.255.0  broadcast 192.168.145.255
            inet6 fe80::20c:29ff:fea7:1724  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:a7:17:24  txqueuelen 0  (Ethernet)
            RX packets 38  bytes 2676 (2.6 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 35  bytes 3654 (3.5 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet6 fe80::20c:29ff:fea7:1724  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:a7:17:24  txqueuelen 1000  (Ethernet)
            RX packets 293183  bytes 327693627 (312.5 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 89134  bytes 18395706 (17.5 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            inet6 ::1  prefixlen 128  scopeid 0x10<host>
            loop  txqueuelen 0  (Local Loopback)
            RX packets 4  bytes 1844 (1.8 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 4  bytes 1844 (1.8 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    virbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.122.1  netmask 255.255.255.0  broadcast 192.168.122.255
            ether 52:54:00:24:30:ec  txqueuelen 0  (Ethernet)
            RX packets 46059  bytes 4057533 (3.8 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 84485  bytes 315875854 (301.2 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    vnet0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet6 fe80::fc54:ff:fe83:f7a0  prefixlen 64  scopeid 0x20<link>
            ether fe:54:00:83:f7:a0  txqueuelen 500  (Ethernet)
            RX packets 12  bytes 1162 (1.1 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 737  bytes 38921 (38.0 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    [root@data-1-1 tools]#

Next, attach the KVM guest to br0 as well.

The guest's interface section was originally configured like this:

    <interface type='network'>
      <mac address='52:54:00:83:f7:a0'/>
      <source network='default'/>
      <model type='virtio'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
    </interface>

Run virsh edit CentOS-7-x86_64 and change the interface section to the following:

    <interface type='bridge'>
      <mac address='52:54:00:83:f7:a0'/>
      <source bridge='br0'/>
      <model type='virtio'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
    </interface>

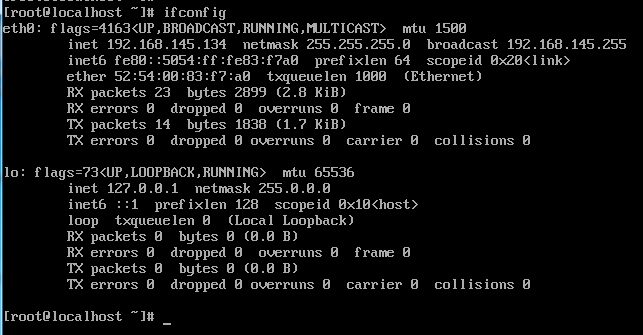
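When several guests need the same change, editing each one by hand gets tedious. The sketch below is one hedged way to script it: it rewrites the two attributes that differ between the original and the bridged interface definition. The function name to_bridge_iface is made up, it assumes the single-quoted attribute style shown above, and it rewrites every network-type interface in the input, so review the result before defining it.

```shell
# Rewrite libvirt <interface type='network'> definitions read from stdin so
# that they attach to a host bridge. $1 is the bridge name (default: br0).
# Assumption: attributes are single-quoted, as in the XML shown above.
# sed on XML is fragile; this is an illustration, not a general XML editor.
to_bridge_iface() (
    br="${1:-br0}"
    sed -e "s/<interface type='network'>/<interface type='bridge'>/" \
        -e "s/<source network='[^']*'\/>/<source bridge='$br'\/>/"
)
```

A possible use is virsh dumpxml CentOS-7-x86_64 | to_bridge_iface br0 > /tmp/guest.xml followed by virsh define /tmp/guest.xml, where /tmp/guest.xml is just an example path.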

Restart the guest and log in over VNC; it has obtained a new IP address.

You can edit the guest's NIC configuration file to switch it to a static address.

At this point you can already log in to the guest from a laptop with Xshell.

    [root@localhost ~]# ip ad
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:83:f7:a0 brd ff:ff:ff:ff:ff:ff
        inet 192.168.145.134/24 brd 192.168.145.255 scope global dynamic eth0
           valid_lft 1643sec preferred_lft 1643sec
        inet6 fe80::5054:ff:fe83:f7a0/64 scope link
           valid_lft forever preferred_lft forever
    [root@localhost ~]#

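ESXi bridges by default, so none of this is needed there.

KVM takes more work here, but that is also what makes it flexible.

KVM tuning

There are three areas: CPU, memory, and I/O. There are not many knobs to turn, so this part mainly introduces the related concepts.

CPU tuning

Ring 0 is kernel mode: the most privileged level, which can operate hardware directly.

Ring 3 is user mode: a less privileged level that cannot operate hardware directly. To write to disk, for example, a program has to switch into kernel mode.

That switch is a kind of context switch.

A guest does not know whether it is really running in kernel mode.

Intel VT-x performs these switches in hardware, which speeds them up.

A KVM guest is a process and has to be scheduled onto a CPU. CPUs have caches to make memory access faster.

The KVM process can be scheduled onto any CPU.

Suppose it is running on cpu1 and has filled that CPU's cache.

If it then moves to cpu2, its data is not in that cache: a cache miss.

Pinning the KVM process to a specific CPU raises the cache hit rate and improves performance.

taskset pins a process to one or more CPUs.

After pinning, the performance gain is under 10%.

The cores of one multi-core CPU generally share a cache (typically the last-level cache).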

    [root@data-1-1 ~]# taskset --help
    Usage: taskset [options] [mask | cpu-list] [pid|cmd [args...]]

    Options:
     -a, --all-tasks         operate on all the tasks (threads) for a given pid
     -p, --pid               operate on existing given pid
     -c, --cpu-list          display and specify cpus in list format
     -h, --help              display this help
     -V, --version           output version information

    The default behavior is to run a new command:
        taskset 03 sshd -b 1024
    You can retrieve the mask of an existing task:
        taskset -p 700
    Or set it:
        taskset -p 03 700
    List format uses a comma-separated list instead of a mask:
        taskset -pc 0,3,7-11 700
    Ranges in list format can take a stride argument:
        e.g. 0-31:2 is equivalent to mask 0x55555555

    For more information see taskset(1).
    [root@data-1-1 ~]#

ps aux | grep kvm shows that the guest's process ID is 8598. Pin it to CPU 1:

    [root@data-1-1 ~]# taskset -cp 1 8598
    pid 8598's current affinity list: 0-3
    pid 8598's new affinity list: 1
    [root@data-1-1 ~]#

Pin it to CPUs 1 and 2 so that it can only run on those two:

    [root@data-1-1 ~]# taskset -cp 1,2 8598
    pid 8598's current affinity list: 1
    pid 8598's new affinity list: 1,2
    [root@data-1-1 ~]#
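The taskset help above mentions two notations, a hexadecimal mask and a CPU list. They describe the same thing: bit N of the mask is set when CPU N is allowed. The sketch below converts a list into the matching mask to make the relationship concrete; cpulist_to_mask is an illustrative helper, and it deliberately does not handle the stride form (0-31:2).

```shell
# Convert a taskset-style CPU list such as "1,2" or "0,3,7-11" into the
# equivalent hexadecimal affinity mask (bit N set means CPU N is allowed).
# cpulist_to_mask is an illustrative name; stride syntax is not supported.
cpulist_to_mask() (
    mask=0
    for part in $(printf '%s' "$1" | tr ',' ' '); do
        first=${part%-*}   # "7-11" -> 7, "3" -> 3
        last=${part#*-}    # "7-11" -> 11, "3" -> 3
        cpu=$first
        while [ "$cpu" -le "$last" ]; do
            mask=$(( mask | (1 << cpu) ))
            cpu=$(( cpu + 1 ))
        done
    done
    printf '0x%x\n' "$mask"
)
```

With this, cpulist_to_mask 1,2 gives 0x6, so taskset -p 0x6 8598 should have the same effect as the taskset -cp 1,2 8598 call shown above.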

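Memory tuning

1. Enable EPT in the host BIOS to speed up address translation.

2. Configure huge pages on the host so that address lookups are faster (with huge pages, guest performance improves by more than 10%).

3. Enable memory merging.

EPT (Extended Page Tables) is a technology for making memory mapping under virtualization more efficient.

With EPT enabled, the addresses that the guest OS obtains from its own page tables are no longer real physical addresses. They are guest-physical addresses, and EPT translates them into real physical addresses.

Run cat /proc/cpuinfo | grep ept to check whether the hardware supports EPT. If it does, KVM uses it automatically.

EPT was developed by Intel to speed up address translation; enabling it in the BIOS is all that is needed.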
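A plain grep ept matches any line containing those three letters, so a slightly stricter check can be useful in scripts. The following sketch looks only at the flags lines and matches the flag as a whole word; cpu_has_flag is an illustrative name, and the second argument exists only so the function can be pointed at a saved copy of cpuinfo.

```shell
# Succeed if the given CPU flag appears as a whole word on a "flags" line.
# $1 = flag name, $2 = cpuinfo file (default: /proc/cpuinfo).
# cpu_has_flag is an illustrative helper, not an existing command.
cpu_has_flag() {
    grep -E '^flags' "${2:-/proc/cpuinfo}" | grep -qw -- "$1"
}
```

Typical use would be cpu_has_flag ept && echo "EPT supported". On an Intel host with the kvm_intel module loaded, /sys/module/kvm_intel/parameters/ept should also read Y when KVM is actually using EPT.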

The host here already merges memory: contiguous memory is combined into 2 MB huge pages (transparent huge pages).

This reduces memory fragmentation.

    [root@data-1-1 ~]# cat /sys/kernel/mm/transparent_hugepage/enabled
    [always] madvise never
    [root@data-1-1 ~]#

The default huge page size here is 2 MB:

    [root@data-1-1 ~]# cat /proc/meminfo | tail -10
    VmallocChunk:   34359451736 kB
    HardwareCorrupted:     0 kB
    AnonHugePages:    362496 kB
    HugePages_Total:       0
    HugePages_Free:        0
    HugePages_Rsvd:        0
    HugePages_Surp:        0
    Hugepagesize:       2048 kB
    DirectMap4k:       85824 kB
    DirectMap2M:     4108288 kB
    [root@data-1-1 ~]#
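HugePages_Total is 0 in the output above, which means no static huge pages are reserved and only transparent huge pages are in play. If you wanted to reserve static huge pages for a guest, the first question is how many are needed. The sketch below does that arithmetic; hugepages_needed is an illustrative name, and the 2048 kB default is taken from the Hugepagesize line above.

```shell
# Number of huge pages needed to back a guest with $1 MiB of RAM.
# $2 is the huge page size in kB (default 2048, the Hugepagesize shown above).
# hugepages_needed is an illustrative helper name.
hugepages_needed() (
    mem_mib="$1"
    page_kb="${2:-2048}"
    # Round up so that the guest's memory always fits.
    echo $(( (mem_mib * 1024 + page_kb - 1) / page_kb ))
)
```

The result could then be fed to sysctl -w vm.nr_hugepages=..., and the guest would additionally have to be configured to use huge-page backing. This article does not cover that step, so treat it as a pointer rather than a tested procedure.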

I/O tuning

Tuning the I/O cache mode

This part follows the blog post below; copyright belongs to the original author.

http://blog.chinaunix.net/uid-20940095-id-3371268.html

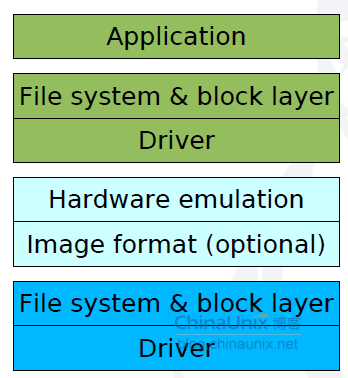

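2. How the KVM storage stack works

The storage stack in the figure above shows that several modules are duplicated:

(1) Two file systems: the guest file system and the host file system

(2) Two page caches: the guest and the host each keep a page cache for files

(3) Two I/O schedulers

Given this much redundancy, suitable tuning can improve I/O performance. The main methods are:

(1) Use the virtio driver instead of the IDE driver; KVM here already uses virtio

(2) Disable the host page cache

(3) Enable huge pages

(4) Disable KSM

virtio is a paravirtualized I/O device framework. It standardizes the data-exchange interface between guest and host, simplifies the I/O path, reduces memory copies, and improves guest I/O efficiency.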

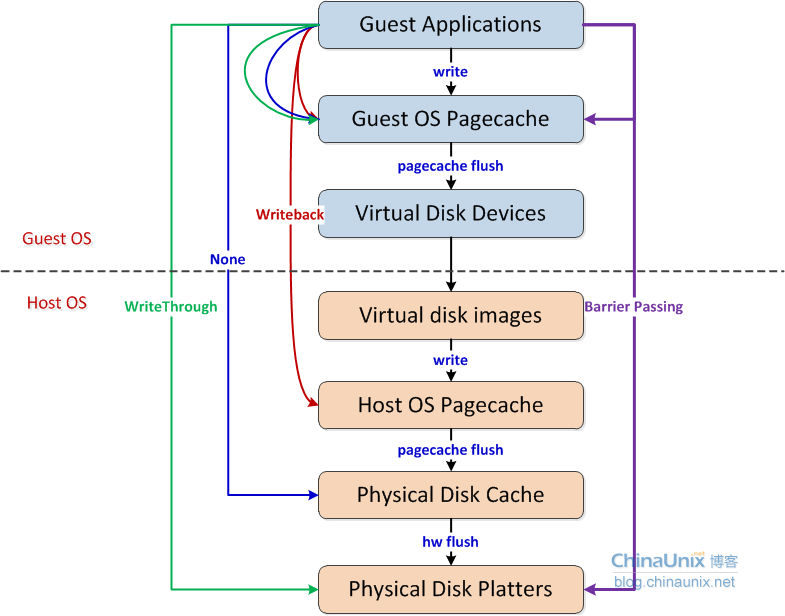

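3. How the host caches I/O to KVM image files

KVM has three settings that determine how the host caches I/O to an image file: none, writeback, and writethrough. The figure shows in detail how the three modes differ.

The figure makes clear that writeback uses both the guest and the host page cache, so the same file is cached twice, which is largely unnecessary.

none bypasses the host page cache, while writethrough still goes through it but reports a write as complete only after the data has reached storage. According to the referenced post, KVM's default cache mode is writethrough. This mode is the safest and cannot leave data inconsistent, but it is also the slowest. Balancing data safety against performance, none is the recommended mode.

However, with the arrival of barrier passing, writeback can also guarantee data consistency. So for raw images none is recommended, and for qcow2 images writeback is recommended.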
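The recommendation above boils down to a small lookup from image format to cache mode. A minimal sketch, assuming only the two formats the text discusses; recommended_cache is an illustrative name and the mapping is this article's recommendation, not a rule enforced by KVM.

```shell
# Map an image format to the cache mode recommended in the text above.
# recommended_cache is an illustrative helper name.
recommended_cache() {
    case "$1" in
        raw)   echo none ;;
        qcow2) echo writeback ;;
        *)     echo "no recommendation for format: $1" >&2; return 1 ;;
    esac
}
```

In a libvirt guest definition the mode is set with the cache attribute on the disk's driver element, for example <driver name='qemu' type='raw' cache='none'/>, which can be changed with virsh edit.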

For more detail, see the following posts:

http://blog.sina.com.cn/s/blog_5ff8e88e0101bjmb.html

http://chuansong.me/n/2187028

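Tuning the I/O scheduler

If your disk is an SSD, be sure to set the scheduler to noop.

noop is intended for cached and flash-based devices.

Three schedulers are currently available; on CentOS 7 the default is deadline.

    [root@data-1-1 ~]# cat /sys/block/sda/queue/scheduler
    noop [deadline] cfq
    [root@data-1-1 ~]#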

The scheduler can be changed like this:

    [root@data-1-1 ~]# echo noop > /sys/block/sda/queue/scheduler
    [root@data-1-1 ~]# cat /sys/block/sda/queue/scheduler
    [noop] deadline cfq
    [root@data-1-1 ~]# echo deadline > /sys/block/sda/queue/scheduler
    [root@data-1-1 ~]# cat /sys/block/sda/queue/scheduler
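In the sysfs output above, the active scheduler is the entry in square brackets. The sketch below reads that entry and picks a scheduler from a disk's rotational flag (0 for SSD or flash, 1 for a spinning disk), following this article's advice of noop for SSDs and deadline otherwise. Both function names are illustrative.

```shell
# Print the active (bracketed) entry from a sysfs choice line such as
# "noop [deadline] cfq". active_choice is an illustrative helper name.
active_choice() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([^]]*\)\].*/\1/p'
}

# Pick a scheduler from the rotational flag: 0 = SSD/flash, 1 = spinning disk.
# The noop/deadline choice mirrors the advice in this article.
scheduler_for() {
    if [ "$1" = "0" ]; then echo noop; else echo deadline; fi
}

# Example of applying it to every sd* disk (left commented out on purpose):
# for q in /sys/block/sd*/queue; do
#     scheduler_for "$(cat "$q/rotational")" > "$q/scheduler"
# done
```

Note that a change made through sysfs lasts only until the next reboot, and that inside a virtual machine the rotational flag may not reflect the real underlying storage.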

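The deadline scheduler

This is the CentOS 7 default.

It suits read-heavy, write-light workloads and works well for databases, but if the disk is an SSD, simply use noop.

For a deeper look at the scheduling algorithms, see the following post: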

http://www.cnblogs.com/kongzhongqijing/articles/5786002.html

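Wrapping up

Make the bridge permanent. The bridging steps above, which attached the host's eth0 to br0, are only temporary: if the host is rebooted, they are lost.

The brctl command used earlier comes from the following package:

    [root@data-1-1 network-scripts]# which brctl
    /usr/sbin/brctl
    [root@data-1-1 network-scripts]# rpm -qf /usr/sbin/brctl
    bridge-utils-1.5-9.el7.x86_64
    [root@data-1-1 network-scripts]#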

Create an ifcfg-br0 file, then change eth0's configuration file so that eth0 is attached to the bridge.

After I made the changes below and restarted the network service, I could no longer log in, and ip ad showed that the IP address had not taken effect. Only after rebooting the machine could I connect again. After logging in remotely again, restarting the network service no longer dropped the connection. This is worth keeping an eye on.

The changed configuration is shown below:

    [root@data-1-1 network-scripts]# rpm -qf /usr/sbin/brctl
    bridge-utils-1.5-9.el7.x86_64
    [root@data-1-1 network-scripts]# pwd
    /etc/sysconfig/network-scripts
    [root@data-1-1 network-scripts]# cat ifcfg-eth0
    TYPE=Ethernet
    NAME=eth0
    DEVICE=eth0
    ONBOOT=yes
    BRIDGE=br0
    [root@data-1-1 network-scripts]# cat ifcfg-br0
    TYPE=Bridge
    BOOTPROTO=static
    DEVICE=br0
    ONBOOT=yes
    IPADDR=192.168.145.133
    NETMASK=255.255.255.0
    GATEWAY=192.168.145.2
    NAME=br0
    [root@data-1-1 network-scripts]#
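Because a mistake in these two files can cut off remote access, it may be worth checking that they agree with each other before restarting the network service. The sketch below verifies that the NIC file points at the bridge that the bridge file defines. The function names are illustrative, and this check does not explain or prevent the lockout described above; it only catches mismatched names.

```shell
# Print the value of KEY ($1) from an ifcfg-style KEY=value file ($2).
# ifcfg_get and bridge_cfg_ok are illustrative helper names.
ifcfg_get() {
    sed -n "s/^$1=//p" "$2" | tr -d '"'
}

# Succeed only if the bridge file declares TYPE=Bridge and the NIC file's
# BRIDGE= value matches the bridge file's DEVICE= value.
# $1 = NIC config file, $2 = bridge config file.
bridge_cfg_ok() (
    nic_file="$1"; br_file="$2"
    [ "$(ifcfg_get TYPE "$br_file")" = "Bridge" ] &&
    [ -n "$(ifcfg_get BRIDGE "$nic_file")" ] &&
    [ "$(ifcfg_get BRIDGE "$nic_file")" = "$(ifcfg_get DEVICE "$br_file")" ]
)
```

From /etc/sysconfig/network-scripts it could be called as bridge_cfg_ok ifcfg-eth0 ifcfg-br0 && echo consistent.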

About the NIC bridge: after the guest starts, its vnet0 interface is attached to br0.

    [root@data-1-1 network-scripts]# virsh start CentOS-7-x86_64
    Domain CentOS-7-x86_64 started

    [root@data-1-1 network-scripts]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    br0             8000.000c29a71724       no              eth0
                                                            vnet0
    virbr0          8000.5254002430ec       yes             virbr0-nic
    [root@data-1-1 network-scripts]#

Additional notes

1. KVM autostart: how to make a guest start automatically when the host boots

    [root@data-1-1 network-scripts]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     1     CentOS-7-x86_64                running

    [root@data-1-1 network-scripts]# virsh autostart CentOS-7-x86_64
    Domain CentOS-7-x86_64 marked as autostarted

    [root@data-1-1 network-scripts]#
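To confirm afterwards that the flag is set, the Autostart line in the output of virsh dominfo can be read. The sketch below extracts that value from dominfo output on stdin; autostart_state is an illustrative name, and it assumes the "Autostart: enable" or "Autostart: disable" line that virsh dominfo prints.

```shell
# Read `virsh dominfo` output on stdin and print the Autostart value
# (expected to be "enable" or "disable").
# autostart_state is an illustrative helper name.
autostart_state() {
    awk -F': *' '$1 == "Autostart" { print $2 }'
}
```

For the guest in this article that would be virsh dominfo CentOS-7-x86_64 | autostart_state. The setting can be reverted with virsh autostart --disable CentOS-7-x86_64.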